
Generative artificial intelligence (GenAI) has seen many recent breakthroughs, positioning organizations for reinvention by unlocking enterprise intelligence, AI-powered business operations, and immersive multisensory experiences.

At the forefront of GenAI's evolution, multimodal AI models stand as a testament to these breakthroughs. As the name indicates, a system with multimodal capabilities accepts and processes user input in multiple forms and responds in near real time with insights and content.

Many C-suite leaders see multimodal AI systems as the next big thing because they could be the eyes, ears, and brains behind generative AI. These systems learn to analyze and integrate data from multiple modalities, including text, images, speech, and video, building a richer representation of the data that improves performance across a range of tasks.

Multimodal AI could touch areas as diverse as chatbots, data analytics, and robotics. Market figures support this: according to one industry report, the global multimodal AI market was estimated at USD 1.34 billion in 2023 and is projected to grow at a compound annual growth rate (CAGR) of 35.8% from 2024 to 2030.

Gartner reports that multimodal AI technology will have a "transformational impact" on the business world, redefining human-computer interaction and broadening the horizons of enterprise intelligence in 2025.

But what is Multimodal Data in AI?

Good question! Multimodal AI involves systems that process and synthesize information from various modalities such as text, images, audio, and video. Unlike traditional AI systems, which excel within a single modality, multimodal AI models build contextual understanding by linking and analyzing different data streams.

For example, a medical AI application may assess a patient's spoken symptoms (audio), historical medical records (text), and diagnostic images (visual) to support a more precise diagnosis.

The main components of Multimodal AI are:

- Modality integration involves relating input from multiple sources. For instance, when processing a video, the model can relate visual cues, such as gestures, to the accompanying speech.

- Cross-modality learning enables one modality to fill gaps in another. For example, a text caption can supply context when an image is unclear.

- Unified models process several modalities within a single system. OpenAI’s GPT-4 with Vision, for example, can interpret text alongside images, exemplifying how multimodal AI enhances versatility.
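To make modality integration and cross-modality learning more concrete, here is a minimal late-fusion sketch. It assumes each modality already has its own classifier that outputs class probabilities; the function name `late_fusion`, the modality weights, and the example scores are hypothetical. When a modality is missing or unreliable (say, a blurry image), its weight is dropped and the remaining weights are renormalized, so the caption fills the gap.

```python
from typing import Dict, Optional


def late_fusion(
    probs: Dict[str, Optional[Dict[str, float]]],
    weights: Dict[str, float],
) -> Dict[str, float]:
    """Combine per-modality class probabilities with a weighted average.

    Modalities whose probabilities are None (missing or unreliable) are
    skipped, and the remaining weights are renormalized to sum to 1.
    """
    available = {m: p for m, p in probs.items() if p is not None}
    if not available:
        raise ValueError("no modality available")
    total_weight = sum(weights[m] for m in available)
    fused: Dict[str, float] = {}
    for modality, class_probs in available.items():
        w = weights[modality] / total_weight
        for label, p in class_probs.items():
            fused[label] = fused.get(label, 0.0) + w * p
    return fused


weights = {"image": 0.6, "text": 0.4}  # hypothetical modality weights

# Both modalities are present.
both = late_fusion(
    {"image": {"cat": 0.7, "dog": 0.3}, "text": {"cat": 0.9, "dog": 0.1}},
    weights,
)
# The image is too blurry to classify, so the caption fills the gap.
text_only = late_fusion({"image": None, "text": {"cat": 0.9, "dog": 0.1}}, weights)
print(both, text_only)
```

Late fusion is only one option; unified models such as GPT-4 with Vision mix modalities much earlier, inside the network itself.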

Key advancements driving Multimodal AI in 2025 for enterprise intelligence

The year 2025 marks a new age in artificial intelligence, with attention focused on multimodal AI. Multimodal AI systems synthesize and analyze different data types, which makes interaction more intuitive and human-like than with traditional systems that process information from a single source.

Multimodal AI is reshaping health care, education, entertainment, and other fields by delivering the seamless, intelligent solutions these industries demand. Some significant developments include:

- Rise of generative models: The success of generative models such as ChatGPT and DALL·E paved the way for advanced multimodal combinations. Some of these architectures can now generate text, images, and audio.

- AI architecture innovations: Transformer architectures that originated in NLP can now handle complex multimodal inputs. For example, a transformer can take in an image together with a textual question and answer it using the visual information (e.g., identifying which objects are in the image).

- Data availability and processing power: Large multimodal datasets are now widely available, such as Google's YouTube-8M for video and Open Images for images, and increasingly efficient computing frameworks make it feasible to train and deploy large models on them.
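The transformer point can be illustrated with a toy example: text-token embeddings and image-patch embeddings are placed in one joint sequence, and self-attention lets every text token attend to every image patch. This minimal sketch uses scaled dot-product attention with identity query, key, and value projections, a simplification (real models learn these projections); the tiny 2-dimensional embeddings are made up.

```python
import math
from typing import List

Vector = List[float]


def softmax(xs: Vector) -> Vector:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens: List[Vector]) -> List[Vector]:
    """Return the attention-weight matrix of scaled dot-product self-attention.

    Queries, keys, and values are the token embeddings themselves
    (identity projections), which keeps the example short.
    """
    d = len(tokens[0])
    weights = []
    for q in tokens:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in tokens]
        weights.append(softmax(scores))
    return weights


def attend(tokens: List[Vector], weights: List[Vector]) -> List[Vector]:
    """Mix the value vectors (here, the tokens) according to the attention weights."""
    d = len(tokens[0])
    return [[sum(w * v[j] for w, v in zip(row, tokens)) for j in range(d)] for row in weights]


# Hypothetical embeddings: two text tokens followed by two image patches.
text_tokens = [[1.0, 0.0], [0.0, 1.0]]    # e.g. "what", "dog?"
image_patches = [[0.1, 0.9], [0.9, 0.1]]  # e.g. a dog patch, a background patch
sequence = text_tokens + image_patches     # one joint multimodal sequence

attn = self_attention(sequence)
outputs = attend(sequence, attn)
# Attention from the "dog?" token (index 1) to the two image patches:
print(attn[1][2], attn[1][3])
```

The "dog?" token puts more weight on the dog patch than on the background patch, which is the mechanism that lets a question be answered from image content.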

Real-world applications of multimodal AI across industries

Multimodal AI, which integrates and processes data from multiple sources (e.g., text, images, speech, and video), is rapidly transforming various industries by enhancing decision-making, improving user experiences, and driving innovation.

Organizations can develop more sophisticated and context-aware AI solutions by leveraging data across modalities. Below is a detailed exploration of how multimodal AI is being applied across key industries:

- Healthcare

In healthcare, multimodal AI holds great promise for improving patient outcomes and optimizing the care process. Clinicians rely on many forms of data to diagnose patients and create treatment plans. These include medical images (such as X-rays or MRIs), patient records, lab results, and clinical notes. By integrating these disparate data sources, multimodal AI systems can provide more accurate and comprehensive analyses.

For instance, AI models can analyze radiology images along with the text report to automatically detect anomalies such as tumors or fractures. Patient history and lab results can also be integrated with imaging data to predict the progression of diseases such as cancer or Alzheimer's and thereby inform treatment recommendations.

Multimodal AI can also support telemedicine through real-time analysis of video consultations, assessing a patient's facial expressions, tone of voice, and spoken words for signs about their health that text alone might not reveal.

- Retail and e-commerce

Multimodal AI in the retail and e-commerce industries is revolutionizing how businesses interact with customers and enhance their shopping experiences. The most widely used applications are visual search and recommendation engines, where customers can upload a photo or use their camera to look for a product. These systems combine image recognition with text-based product descriptions and user reviews to produce accurate, context-aware recommendations.

For example, a shopper who wants to buy shoes can photograph a pair they like; a multimodal AI system can analyze the photo and suggest similar products that are available at a nearby physical store.
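The shoe example boils down to nearest-neighbour search over image embeddings, filtered by stock. The sketch below assumes an image encoder has already turned the shopper's photo and each catalogue image into embedding vectors; the `Product` class, the catalogue entries, the vectors, and the store names are all invented for illustration.

```python
import math
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Product:
    name: str
    embedding: List[float]  # hypothetical image-encoder output
    in_stock_at: Set[str] = field(default_factory=set)


def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def visual_search(query: List[float], catalogue: List[Product], store: str, k: int = 2) -> List[str]:
    """Rank products available at `store` by cosine similarity to the query photo."""
    candidates = [p for p in catalogue if store in p.in_stock_at]
    ranked = sorted(candidates, key=lambda p: cosine(query, p.embedding), reverse=True)
    return [p.name for p in ranked[:k]]


catalogue = [
    Product("red running shoe", [0.9, 0.1, 0.0], {"downtown"}),
    Product("red trail shoe", [0.8, 0.2, 0.1], {"downtown", "airport"}),
    Product("black boot", [0.0, 0.2, 0.9], {"downtown"}),
    Product("red sneaker", [0.95, 0.05, 0.0], {"airport"}),
]
photo = [1.0, 0.1, 0.0]  # embedding of the shopper's photo
result = visual_search(photo, catalogue, store="downtown")
print(result)
```

A production system would use an approximate nearest-neighbour index instead of sorting the whole catalogue, and could blend in text signals such as descriptions and reviews.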

AI-powered virtual shopping assistants use speech recognition and natural language processing to help customers ask about products or order details, and they can draw on shopping preferences and purchase history. Multimodal AI can also improve in-store experiences by analyzing customer behavior, including voice interactions, alongside feedback from mobile apps and social media posts. Retailers track customer emotions, preferences, and reactions to specific products and use these insights to improve them.

- Autonomous vehicles

Multimodal AI is essential for developing autonomous vehicles in the transportation industry. Self-driving cars use a combination of sensors, including cameras, LiDAR (Light Detection and Ranging), GPS, and radar, to navigate their environment. By processing and integrating these data streams, the vehicle can better perceive its surroundings and make safe, informed decisions in real time.

For example, a multimodal AI system in an autonomous car uses camera images to detect objects such as pedestrians, vehicles, and traffic signs, while LiDAR and radar measure the distance and speed of other vehicles and help build a 3D map of the environment. Fusing these inputs gives the system a more complete picture than any single sensor could. Integrating voice commands and real-time traffic data further improves both the driving experience and operational efficiency.
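One common way to fuse distance estimates from several sensors is inverse-variance weighting: each reading is weighted by how precise its sensor is. It is also what a Kalman filter's measurement update reduces to when combining independent Gaussian estimates of a fixed quantity. The sketch below assumes independent, unbiased Gaussian errors; the readings and sensor variances are illustrative, not real specifications.

```python
from typing import List, Tuple


def fuse_estimates(readings: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Fuse (value, variance) pairs by inverse-variance weighting.

    Assumes each sensor's error is independent, unbiased, and Gaussian.
    Returns the fused value and its variance, which is never larger than
    the smallest input variance.
    """
    inv_vars = [1.0 / var for _, var in readings]
    total = sum(inv_vars)
    fused_value = sum(value * w for (value, _), w in zip(readings, inv_vars)) / total
    return fused_value, 1.0 / total


# Hypothetical distance (metres) to the car ahead, with per-sensor variances.
camera = (25.0, 4.0)  # monocular depth estimate: noisy
radar = (24.0, 1.0)
lidar = (24.2, 0.25)  # most precise

distance, variance = fuse_estimates([camera, radar, lidar])
print(f"{distance:.2f} m (variance {variance:.3f})")
```

The fused estimate leans toward the LiDAR reading, and its variance is lower than that of any single sensor, which is the practical payoff of sensor fusion.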

- Education

In education, multimodal AI is revolutionizing how students learn and how teachers deliver instruction. AI-powered learning platforms that incorporate text, images, video, and audio cater to diverse learning styles and engage students more interactively. For example, a multimodal AI system might combine text-based content, images, and video tutorials to explain complex topics in science or mathematics, so that students with different learning preferences can better understand the material.

Multimodal AI is also being used in assessment and feedback systems. By analyzing both spoken and written responses in language-learning apps or online exams, AI can assess pronunciation, grammar, and sentence structure in real time and offer personalized feedback to students. Teachers can also use AI-driven platforms that integrate multimodal data to track student progress and offer tailored learning resources.

In addition, multimodal AI is enabling immersive learning experiences through virtual and augmented reality (VR/AR), where students can interact with 3D models, hear contextual information, and see virtual environments. This combination of modalities fosters active engagement and enhances the learning experience.

- Customer service and chatbots

Multimodal AI is having a significant impact on customer service, particularly through advanced chatbots and virtual assistants. These assistants can handle customer inquiries across multiple channels, such as voice, text, and even visual interfaces. By integrating speech recognition, NLP, and image recognition, multimodal chatbots can respond to customer queries more effectively and provide richer, contextually relevant answers.

For example, a customer service chatbot in a retail setting can use speech recognition to understand spoken queries about product availability, while simultaneously analyzing product images or browsing data to provide visual recommendations. These systems also use sentiment analysis to assess customer emotions, adapting their responses to ensure a positive customer experience.

In call centers, multimodal AI can analyze both the tone of a customer’s voice and the content of their conversation to detect frustration. By combining multiple input forms, multimodal AI enhances the overall customer experience by delivering more accurate, responsive, and human-like interactions.

- Manufacturing and industrial automation

Multimodal AI is being increasingly applied in manufacturing and industrial automation to improve efficiency, safety, and quality control. In smart factories, AI systems integrate data from cameras, sensors, and sound analysis to monitor equipment, detect anomalies, and optimize production processes. For example, AI models can analyze video feeds from surveillance cameras to identify production line issues while simultaneously using sensor data to track machine performance.

Furthermore, AI systems can monitor machinery and detect early signs of mechanical failure, such as unusual sounds or vibrations. By integrating these data sources, manufacturers can predict equipment breakdowns, manage maintenance schedules, and reduce downtime, resulting in more effective operations and cost savings.
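Detecting "unusual vibration" is often framed as flagging readings that deviate strongly from recent behaviour. The sketch below uses a rolling z-score over a window of past readings from a single sensor; the function name, window size, threshold, and vibration values are hypothetical and would need tuning per machine. A multimodal system would combine several such signals (vibration, sound, camera) rather than rely on one.

```python
import statistics
from collections import deque
from typing import Deque, Iterable, List


def rolling_zscore_anomalies(
    readings: Iterable[float], window: int = 5, threshold: float = 3.0
) -> List[int]:
    """Return indices of readings more than `threshold` standard deviations
    away from the mean of the preceding `window` readings."""
    history: Deque[float] = deque(maxlen=window)
    anomalies = []
    for i, x in enumerate(readings):
        if len(history) == window:
            mean = statistics.fmean(history)
            std = statistics.pstdev(history)
            if std > 0 and abs(x - mean) / std > threshold:
                anomalies.append(i)
        history.append(x)
    return anomalies


# Hypothetical vibration amplitudes (mm/s) from one machine.
vibration = [2.0, 2.1, 1.9, 2.0, 2.2, 2.1, 2.0, 6.5, 2.1, 2.0]
flagged = rolling_zscore_anomalies(vibration)
print(flagged)
```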

In quality control, multimodal AI can combine visual inspection (through cameras) with data from other sources, such as sensor readings or user feedback, to identify defects in products and ensure that manufacturing processes are running smoothly. This integration of modalities enhances precision and decision-making, ensuring that high-quality products are consistently delivered.

- Security and surveillance

Multimodal AI is also transforming security and surveillance systems, where it can scan video feeds, audio recordings, and sensor data to detect threats and suspicious behavior and respond to emergencies much more efficiently. Traditional surveillance systems analyze mainly visual data, but multimodal AI can add audio recognition (such as identifying gunshots or cries of distress) and behavioral analysis (such as identifying unusual movement).

For instance, AI-powered surveillance systems can identify a person's facial features, recognize unusual behavior, and listen for specific sounds in real time. Such systems can then trigger alerts to security personnel or law enforcement. In sensitive areas, such as airports or critical infrastructure, multimodal AI systems can combine data from multiple sensors and cameras to provide enhanced security. AI-based video analytics can also give security teams actionable insights, for example by tracking moving objects or individuals across multiple camera angles for a more accurate assessment.

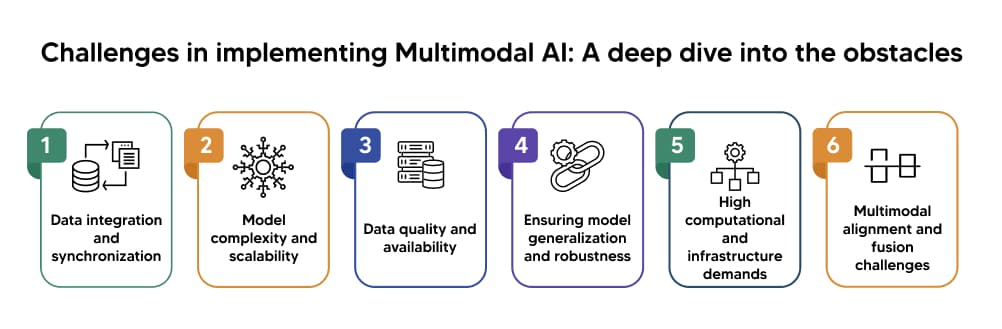

Challenges in implementing multimodal AI: A deep dive into the obstacles

Despite its enormous potential, multimodal AI is difficult to develop, and implementing it raises a number of problems that organizations must address to deploy these solutions successfully. These range from technical data issues and ethical concerns to purely operational problems. Below are the critical challenges organizations may face when integrating multimodal AI systems:

- Data integration and synchronization

One of the primary challenges in applying multimodal systems is integrating and synchronizing data from different sources; traditional AI models, by contrast, work with a single type of data, such as text, images, or audio. For example, when the visual data of an image is combined with the textual data of a caption, or with audio such as speech, misalignments are likely if the data comes from different sources at different times.

Achieving consistency among modalities is daunting because each type of data differs in structure and format. Data preprocessing becomes critical to ensure that data from multiple sources can be aligned and mapped into a unified framework. With proper synchronization, the AI model can make accurate predictions and learn relevant patterns across modalities.
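A common first step toward synchronization is aligning records by timestamp. This sketch pairs each video frame with the transcript segment whose time span contains it, using binary search from Python's `bisect` module. The frame rate, segment times, and text are invented, and both streams are assumed to share one clock; in practice, clock offsets between devices must be corrected first.

```python
import bisect
from typing import List, Optional, Tuple

Segment = Tuple[float, float, str]  # (start_s, end_s, transcript text)


def align_frames_to_segments(
    frame_times: List[float], segments: List[Segment]
) -> List[Optional[str]]:
    """For each frame timestamp, return the text of the transcript segment
    covering it, or None if the frame falls in a gap (e.g. silence).

    `segments` must be sorted by start time and non-overlapping.
    """
    starts = [start for start, _, _ in segments]
    aligned: List[Optional[str]] = []
    for t in frame_times:
        i = bisect.bisect_right(starts, t) - 1  # last segment starting at or before t
        if i >= 0 and t < segments[i][1]:
            aligned.append(segments[i][2])
        else:
            aligned.append(None)
    return aligned


segments = [(0.0, 1.2, "hello"), (1.5, 2.4, "look at this"), (3.0, 4.0, "thanks")]
frames = [i * 0.5 for i in range(8)]  # 2 fps: 0.0, 0.5, ..., 3.5 seconds
aligned = align_frames_to_segments(frames, segments)
print(list(zip(frames, aligned)))
```

Frames that fall between segments map to `None`, which makes gaps explicit instead of silently pairing a frame with the wrong speech.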

- Model complexity and scalability

Multimodal AI systems are complex because they rely on integrated models that process different types of data simultaneously. Developing these models requires substantial computing power and advanced algorithmic expertise. Training is very compute-intensive and often requires powerful hardware infrastructure such as high-performance GPUs or distributed computing systems.

As the models grow more complex, so do the scalability challenges. The biggest issue is that multimodal models tend to consume a lot of resources because they must process large quantities of heterogeneous data, which can make real-time deployment problematic. Scaling these models up in size and deploying them to production with minimal impact on performance remains a challenge for many organizations.

- Data quality and availability

For multimodal AI systems to work effectively, they need access to high-quality, labeled datasets across multiple modalities. However, obtaining and curating such datasets is often a time-consuming and resource-intensive task. Data for some modalities, such as video and audio, can be especially challenging to label and annotate accurately, as it requires a deep understanding of context and content.

Moreover, data privacy and copyright concerns also come into play, especially when dealing with sensitive or proprietary information. Organizations must ensure that data is collected, stored, and used in compliance with regulations like GDPR or CCPA. Limited access to well-annotated, diverse datasets may hinder the development of robust multimodal AI systems, and data scarcity is a persistent challenge in many industries.

- Ensuring model generalization and robustness

Multimodal AI models must be able to generalize well across different contexts, environments, and real-world scenarios. However, training a model that performs consistently across multiple modalities can be difficult. Models trained on specific datasets may not generalize well to new, unseen data, leading to overfitting or poor performance in real-world applications.

For example, a multimodal AI model trained to analyze facial expressions and speech patterns in one cultural context may struggle to generalize when applied to a different cultural setting. This issue arises because the context in which multimodal inputs are presented can significantly influence how data is interpreted. Addressing these generalization issues requires robust testing and validation across diverse datasets to ensure the model's performance is reliable and consistent.
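Testing across diverse datasets usually means reporting metrics per subgroup rather than one overall number, because a strong average can hide a weak group. Here is a minimal sketch that computes accuracy per context and the gap between the best and worst groups; the group labels, emotion classes, and predictions are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Example = Tuple[str, str, str]  # (group, true_label, predicted_label)


def accuracy_by_group(examples: List[Example]) -> Dict[str, float]:
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for group, truth, pred in examples:
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}


def best_worst_gap(per_group: Dict[str, float]) -> float:
    """Difference between the best- and worst-performing groups."""
    return max(per_group.values()) - min(per_group.values())


# Hypothetical emotion-recognition results from two cultural contexts.
results = [
    ("context_a", "happy", "happy"), ("context_a", "sad", "sad"),
    ("context_a", "angry", "angry"), ("context_a", "happy", "happy"),
    ("context_b", "happy", "neutral"), ("context_b", "sad", "sad"),
    ("context_b", "angry", "happy"), ("context_b", "happy", "happy"),
]
per_group = accuracy_by_group(results)
print(per_group, best_worst_gap(per_group))
```

Here the overall accuracy is 75%, which looks acceptable, while the per-group view shows the model is right only half the time in the second context.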

- High computational and infrastructure demands

Multimodal AI systems often require significant computational resources due to their complexity and the volume of data they handle. These systems need powerful hardware, including Graphics Processing Units (GPUs) and specialized accelerators, to train and run models efficiently. Additionally, the infrastructure required to store and process vast amounts of multimodal data (e.g., video, audio, and text) needs to be both scalable and secure.

For organizations without access to high-performance computing (HPC) infrastructure or cloud-based solutions, the costs and maintenance of such systems can be prohibitive. The computational load required for training multimodal models can also lead to longer development cycles, delayed project timelines, and high operational costs.

- Multimodal alignment and fusion challenges

One of the more nuanced challenges in multimodal AI is the fusion and alignment of data from multiple sources. The process of combining different modalities, such as text, images, and speech, is not always straightforward. Each modality may have its own specific characteristics, features, and methods of representation, which can complicate how the model processes and synthesizes this information.

Aligning and fusing multimodal data effectively requires sophisticated techniques to handle different data formats, resolutions, and timeframes. For example, when combining visual and text data, the model must consider not only the content of the image but also how it relates to the text, for instance interpreting a caption in the context of what the image shows. Without effective fusion mechanisms, the AI may fail to extract meaningful information, undermining its performance.
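One of the simplest fusion strategies, early (feature-level) fusion, puts each modality on a common scale before concatenating the features, so that a modality with large raw values does not dominate. The sketch below L2-normalizes each modality's vector and concatenates them in a fixed order; the feature values are made up, and many production systems instead use learned fusion layers such as cross-attention.

```python
import math
from typing import Dict, List


def l2_normalize(v: List[float]) -> List[float]:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm else list(v)


def early_fusion(features: Dict[str, List[float]], order: List[str]) -> List[float]:
    """Concatenate per-modality feature vectors after L2-normalizing each one,
    so no modality dominates just because its raw values are larger.
    `order` fixes the concatenation order so the fused layout is stable."""
    fused: List[float] = []
    for modality in order:
        fused.extend(l2_normalize(features[modality]))
    return fused


# Hypothetical features: image features on a large scale, text features on a small one.
features = {
    "image": [300.0, 400.0],    # e.g. pooled CNN activations
    "text": [0.03, 0.04, 0.0],  # e.g. a sentence embedding
}
fused = early_fusion(features, order=["image", "text"])
print(fused)
```

Normalization alone does not capture relationships between modalities; it only makes their features comparable before a downstream model learns those relationships.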

How to prepare for the Multimodal AI revolution?

As multimodal AI becomes a driving force for innovation in 2025, individuals and organizations must strategically prepare to harness its potential. The following approaches can help ensure readiness and maximize the benefits of this transformative technology:

- Upskilling in AI and related fields

The demand for professionals skilled in multimodal AI is rapidly increasing. To stay ahead, it is important to master foundational concepts and gain expertise in artificial intelligence subfields like natural language processing (NLP), computer vision, and audio processing. Platforms like Coursera, edX, and specialized bootcamps offer courses tailored to these domains.

It is equally important to learn multimodal AI tools and familiarize yourself with cutting-edge frameworks: PyTorch and TensorFlow for developing and training models; Hugging Face Transformers, which supports multimodal models; and OpenAI’s CLIP (Contrastive Language-Image Pre-training) for text-image understanding.

Additionally, build practical skills by working on multimodal AI applications, such as creating systems that combine speech and visual data for real-time captioning.
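Since CLIP comes up in this list, a short sketch of the idea behind it may help: a symmetric contrastive (InfoNCE-style) loss that pulls matching image-text pairs together and pushes mismatched pairs apart within a batch. This is a plain-Python illustration of that objective, not OpenAI's implementation; the embeddings and the fixed temperature of 0.07 are hypothetical, and a real implementation would use PyTorch or TensorFlow tensors and a learned temperature.

```python
import math
from typing import List

Vector = List[float]


def normalize(v: Vector) -> Vector:
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def cross_entropy(logits: Vector, target: int) -> float:
    """-log softmax(logits)[target], computed stably via log-sum-exp."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum - logits[target]


def clip_style_loss(image_emb: List[Vector], text_emb: List[Vector], temperature: float = 0.07) -> float:
    """Symmetric contrastive loss: image i should match text i and vice versa."""
    imgs = [normalize(v) for v in image_emb]
    txts = [normalize(v) for v in text_emb]
    n = len(imgs)
    # logits[i][j] = cosine similarity of image i and text j, divided by the temperature
    logits = [
        [sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature for j in range(n)]
        for i in range(n)
    ]
    image_to_text = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    text_to_image = sum(cross_entropy([logits[i][j] for i in range(n)], j) for j in range(n)) / n
    return (image_to_text + text_to_image) / 2


images = [[1.0, 0.0], [0.0, 1.0]]
matched_texts = [[0.9, 0.1], [0.1, 0.9]]  # caption i describes image i
swapped_texts = [[0.1, 0.9], [0.9, 0.1]]  # captions in the wrong order
good = clip_style_loss(images, matched_texts)
bad = clip_style_loss(images, swapped_texts)
print(good, bad)
```

Training drives this loss down, which is what places an image and its caption close together in a shared embedding space.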

- Investing in AI-ready infrastructure

Organizations must establish robust infrastructure to accommodate the demands of multimodal AI. Ensure you have access to large, diverse datasets across multiple modalities (e.g., text, audio, video). Use sources such as Kaggle, YouTube-8M, or Open Images to obtain data for training and testing models.

The second key step is cloud and edge computing. Leverage cloud platforms such as AWS, Google Cloud, and Azure for scalable computing resources. For real-time applications, edge devices such as IoT sensors and smart devices should integrate multimodal AI capabilities.

Don’t forget to equip your teams with tools that support the processing and analysis of multimodal data, such as Microsoft Azure AI, NVIDIA Clara, or IBM Watson.

- Building multidisciplinary teams

To effectively implement multimodal AI, organizations need to build multidisciplinary teams that bring together experts from various domains such as computer vision, natural language processing (NLP), audio engineering, and data science.

These teams should collaborate to design and deploy systems that leverage multiple modalities for more comprehensive and intelligent outcomes. Encouraging cross-training within teams can foster a deeper understanding of how different modalities interact, enabling smoother integration of diverse technologies.

Moreover, involving ethicists and social scientists in these teams is crucial to ensure that ethical considerations, such as fairness and privacy, are integrated into the development process.

- Creating ethical AI policies and practices

As multimodal AI systems become more prevalent, addressing ethical concerns is essential. Organizations must prioritize bias detection and mitigation by developing algorithms that can identify and reduce biases within the data, ensuring equitable outcomes for all users.
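Bias detection often starts with simple group-level metrics. One example is the demographic parity difference: the gap in positive-decision rates between groups. The sketch below computes it for hypothetical binary decisions from a screening model. The group names and the 0.1 review threshold are illustrative assumptions, and the right fairness metric depends on the application.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def positive_rates(decisions: List[Tuple[str, int]]) -> Dict[str, float]:
    """Share of positive (1) decisions per group."""
    pos: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for group, decision in decisions:
        total[group] += 1
        pos[group] += decision
    return {g: pos[g] / total[g] for g in total}


def demographic_parity_difference(decisions: List[Tuple[str, int]]) -> float:
    rates = positive_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Hypothetical screening outputs (1 = approved) for two groups.
decisions = (
    [("group_a", 1)] * 6 + [("group_a", 0)] * 4
    + [("group_b", 1)] * 3 + [("group_b", 0)] * 7
)
gap = demographic_parity_difference(decisions)
print(f"parity gap = {gap:.2f}", "-> review" if gap > 0.1 else "-> ok")  # 0.1 is illustrative
```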

Furthermore, the growing use of multimodal systems raises significant data privacy challenges, requiring businesses to adopt robust security measures, such as anonymization and secure storage practices, to protect user information. Adhering to privacy regulations like GDPR and CCPA will be crucial in maintaining compliance and safeguarding user rights.
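Anonymization can take several forms. A common building block is pseudonymization with a keyed hash (HMAC), which replaces direct identifiers with stable tokens that cannot be reversed without the secret key. The sketch below uses Python's standard `hmac`, `hashlib`, and `secrets` modules; the record fields and the choice of which fields count as identifiers are hypothetical. Under GDPR, pseudonymized data is still personal data, so this complements other safeguards rather than replacing them.

```python
import hashlib
import hmac
import secrets
from typing import Dict, Set


def pseudonymize(value: str, key: bytes) -> str:
    """Map an identifier to a stable token using HMAC-SHA256.
    The same key always yields the same token, so records can still be joined."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def scrub_record(record: Dict[str, str], id_fields: Set[str], key: bytes) -> Dict[str, str]:
    """Replace direct identifiers with tokens; leave other fields untouched."""
    return {k: pseudonymize(v, key) if k in id_fields else v for k, v in record.items()}


key = secrets.token_bytes(32)  # in practice, keep this in a secrets manager, not in code
record = {"patient_name": "Jane Doe", "email": "jane@example.com", "transcript_id": "t-001"}
safe = scrub_record(record, {"patient_name", "email"}, key)
print(safe)
```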

Creating transparent and interpretable AI models that clearly explain their decision-making processes will also help build trust and accountability in these systems.

- Exploring industry-specific applications

Multimodal AI will be implemented differently across sectors, and companies need to study how the technology can be tailored to their own. In health care, for instance, an AI system could integrate patient records, diagnostic images, genetic information, and more into a single service to support better treatment and health outcomes.

Conclusion

Multimodal AI opens a new era of technological possibilities in which the strengths of individual modalities are combined into coherent, intelligent systems. In 2025, this technology is poised to transform industries, enhance human-computer interaction, and nudge us closer to the dream of artificial general intelligence (AGI). By addressing its challenges and preparing for integration, businesses and individuals can unlock its potential and build a smarter, more connected future.