What is Big Data? Dynamic, huge, and disparate data generated by people and machines is termed Big Data. You need innovative and scalable technologies to gather, host, and analyze this vast pool of data and to derive real-time insights related to customers, profit, risks, performance, productivity levels, and stakeholder value.

Information includes structured as well as unstructured data; you can collect information from social media, devices connected over the Internet, voice recordings, machine data, videos, and many other sources.

The 4 Vs of Big Data include:

- Volume: The amount of data created is huge compared to traditional sources of data.

- Variety: You gather data from different sources; data is being generated by people and machines.

- Veracity: The quality of big data needs to be tested thoroughly to gain useful insights.

- Velocity: Data creation is taking place rapidly – the process is never-ending, even when you are asleep.

To manage huge volumes of data, you need an efficient business analytics system in place.

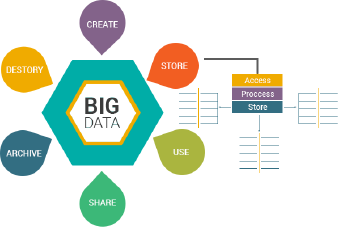

Simply put, Big Data Lifecycle Management along with data governance methods is carried out in the following stages:

1. Creation: First, data has to pass the robust firewalls of the organization’s website. This process, termed Data Capture, creates data values that do not yet exist and have never existed in the enterprise. You can capture data in the following ways:

- Data Acquisition: Using existing data produced by an external organization

- Data Entry: Creating new data sets for an organization through human operators or devices generating enterprise data

- Signal Reception: Capturing data from devices, especially from control systems, which is gaining importance with IoT

2. Maintenance: Data maintenance means supplying data points to support the usage and synthesis of data, ideally through a solution tailored to your business processes. It involves processing data without deriving any specific value for your enterprise. Tasks include transferring, integrating, refining, and transforming data. Data maintenance focuses mainly on data management activities; hence, it poses a huge challenge to data governance. Enterprises should establish a clear rationale for how data is supplied to end points, thereby avoiding a proliferation of data transfers.

3. Data Synthesis: This is a new and not-so-common stage in the Big Data Lifecycle Management process. It refers to creating data values through logic that uses other data as inputs. This area of big data analytics uses process modeling for making business decisions. It does not involve deductive logic, i.e. a top-down reasoning process in which a conclusion is derived from a combination of assumptions that are true. For example, if A=B and B=C, then A=C. In contrast, inductive reasoning draws on expert experience, opinion, or judgment to derive a logical solution; for instance, the creation of credit scores relies on inductive logic.

4. Data Usage: This is the application of big data as information in tasks that the enterprise itself runs and manages. It involves tasks outside the lifecycle. Data becomes a central point of the business model in this case. There are regulatory constraints on using data; data governance ensures these regulations are followed.

5. Data Publication: This stage involves sending organizational data outside the enterprise location.

6. Archiving Data: This refers to copying data to a storage environment so that it can be reused for active production activities. Data archives only store data and do not take care of maintenance, publication, or usage activities.

7. Purging: This refers to removing every copy of data present in the enterprise. The objective is met by working on data archives.

Although it is easy and affordable to capture, process, and store data, you will not find it useful unless it yields relevant information. Moreover, the information must be accessible to the right audience, who can use the data to arrive at insightful decisions and successful business outcomes.
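
To make the difference between stages 6 and 7 of the lifecycle concrete, here is a minimal Python sketch. The `DataStore` class and its method names are hypothetical and stand in for whatever production and archive storage your enterprise actually uses; the only point is that archiving adds a stored copy, while purging removes every copy, including the archived one.

```python
class DataStore:
    """Hypothetical in-memory stand-in for production and archive storage."""

    def __init__(self):
        self.production = {}  # data in active use
        self.archive = {}     # stored copies only; no maintenance or usage here

    def capture(self, key, value):
        # Creation: a new data value enters the enterprise.
        self.production[key] = value

    def archive_record(self, key):
        # Archiving: copy the record into archive storage for possible reuse.
        self.archive[key] = self.production[key]

    def purge(self, key):
        # Purging: remove every copy, so the archive must be cleaned as well.
        self.production.pop(key, None)
        self.archive.pop(key, None)

    def copies(self, key):
        # Count how many stores still hold the record.
        return sum(key in store for store in (self.production, self.archive))


store = DataStore()
store.capture("order-1", {"total": 42})
store.archive_record("order-1")
copies_after_archive = store.copies("order-1")

store.purge("order-1")
copies_after_purge = store.copies("order-1")
```

In a real system the archive would usually live in separate, cheaper storage, which is why a purge that only touches production data would leave copies behind.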

Three major enablers of big data analytics include:

- Mobile networks allow easy distribution of real-time information

- Interactive technologies help in creating visual data in order to review complex data sets and present useful business insights

- Technical specialists handle these complexities and simplify outcomes for daily use

Despite their efforts to capture and assemble data, companies face challenges in acquiring concrete value from collected data to transform operational processes. You must devise strategies to overcome these challenges and support business adoption. Collect insights on:

- Establishing the need for a big data platform and attributing value to analytics

- Negotiating executive demand for resolving day-to-day challenges with your data analysis team

- Translating data into a practical marketing tool

- Enabling seamless extraction of valuable data within the organization

Most enterprises realize that converting big data into big insights is crucial for future success, but they are slow to implement these solutions because of operational procedures and workflow issues. The major issue lies with the data analytics workflow, which involves loading, ingesting, manipulating, transforming, accessing, modeling and, last but not least, visualizing and analyzing data.

Every step of data analytics needs manual intervention, which means manual coding, testing for errors, and further delays. In addition, technologies like NoSQL and Hadoop require technical expertise and specialized skills. Once you have collected and prepared all the data, any new data requires additional collection resources, and the linear process starts again from scratch.
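
One way to reduce that manual effort is to express each workflow step as a small function and chain the steps, so the whole sequence can be rerun when new data arrives. The following is a minimal Python sketch under stated assumptions: the sample data, the column names, and the function names (`load`, `transform`, `model`, `analyze`, `run_pipeline`) are all made up for illustration, and a real big data pipeline would use distributed tooling rather than in-memory lists.

```python
import csv
import io
from statistics import mean

# Hypothetical raw input; in practice this would come from a file or a feed.
RAW = """customer,amount
alice,120.50
bob,80
alice,30
"""


def load(text):
    """Load: parse raw CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: convert the amount strings to numbers."""
    return [{"customer": r["customer"], "amount": float(r["amount"])} for r in rows]


def model(rows):
    """Model: total spend per customer."""
    totals = {}
    for r in rows:
        totals[r["customer"]] = totals.get(r["customer"], 0.0) + r["amount"]
    return totals


def analyze(totals):
    """Analyze: average spend across customers."""
    return mean(totals.values())


def run_pipeline(text):
    """Run every step in order; rerunning on new input needs no manual work."""
    totals = model(transform(load(text)))
    return totals, analyze(totals)


totals, average_spend = run_pipeline(RAW)
```

Because each step is a separate function, a new data source only needs a new `load` step; the later steps can stay unchanged.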

Keeping in mind the challenges that can arise while managing and implementing big data solutions, enterprises must adopt easy-to-use IT solutions that address today's burning issues and grant the flexibility to address future challenges. These solutions need efficient data integration, along with data visualization and exploration tools, to support existing and future sources of data.

Reducing Complexities of Big Data

Big data can turn into a big mess. It brings up new challenges for enterprises; collecting data from different data sources and analyzing it accurately is tough. A huge amount of information sits in data silos across your enterprise and is not put to use by your applications and business units.

Big data is more complex to use compared to conventional data sets because you have to handle more data, sources, and structures. Moreover, big data platforms are not governed by industry standards or policies for managing this complexity. Hence, the data is questionable and might disappear faster than you can manage it.

You can implement the following measures to address the complexity of using big data.

- Prioritize your business-critical data, i.e. data related to customers, products, sites, suppliers, etc.

- Deliver insights for one customer profile; collect information about that particular customer from external as well as internal sources.

- Update, collate, and refine data related to that profile to create a uniform profile as the master profile.

- Enter that information in all your applications, operational systems, and analytics.

- Identify critical relationships across your entire set of business-critical data.

- Repeat the process regularly for resolving data complexities.
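
The step of collating records into a master profile can be sketched in a few lines of Python. This is only an illustration under assumptions: the record layout, the source names, and the rule "the most recently updated non-empty value wins" are hypothetical choices, and your own merge rules may differ.

```python
from datetime import date


def build_master_profile(records):
    """Merge source records for one customer into a single master profile.

    Assumed rule: for each field, the most recently updated
    non-empty value wins.
    """
    master = {}
    # Process older records first so newer values overwrite them.
    for record in sorted(records, key=lambda r: r["updated"]):
        for field, value in record["fields"].items():
            if value not in (None, ""):
                master[field] = value
    return master


# Hypothetical records for the same customer from two sources.
records = [
    {
        "source": "crm",
        "updated": date(2023, 1, 10),
        "fields": {"name": "A. Smith", "email": "a.smith@example.com", "city": ""},
    },
    {
        "source": "web_form",
        "updated": date(2023, 6, 2),
        "fields": {"name": "Alice Smith", "city": "Leeds"},
    },
]

master = build_master_profile(records)
```

The resulting master profile is what you would then enter into your applications, operational systems, and analytics, and rebuild each time you repeat the process.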

Customer 360 Experience – New Dimension of Big Data

Fast-moving enterprises are making customer satisfaction their primary business goal. Technology is helping them: mobile devices, video customer service, online chat communities, social media channels, etc. have become personalized touch points for any business. Customers can now interact with businesses directly; hence, aggregating data from various customer interactions has become difficult.

Modern enterprises use a wide variety of tools to develop a 360-degree customer experience. These include social media tools that gather customer feedback from Facebook or Twitter, predictive analytics that determines customer preferences for the next purchase, CRM suites, and many more.

Hence, it is crucial for businesses to integrate their software applications with interactive networking platforms that enable information sharing and an accurate analysis of customer needs. In a few cases, you may have to use application programming interfaces (APIs) to create applications that share data. Data cleansing and quality practices are essential to understanding customer needs accurately: the data must be current, and it should not be conflicting or duplicated.
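
As a rough illustration of such a data quality check, the Python sketch below groups customer records by a normalized email address and reports duplicates and conflicting field values. The record fields, the choice of email as the matching key, and the function names are assumptions made for this example, not a prescribed method.

```python
from collections import defaultdict


def normalize_email(email):
    """Treat emails that differ only in case or surrounding spaces as equal."""
    return email.strip().lower()


def find_duplicates_and_conflicts(records, fields=("name", "phone")):
    """Return records sharing an email, and the fields on which they disagree."""
    groups = defaultdict(list)
    for rec in records:
        groups[normalize_email(rec["email"])].append(rec)

    # A duplicate is any email that appears on more than one record.
    duplicates = {k: v for k, v in groups.items() if len(v) > 1}

    # A conflict is a field with more than one distinct non-empty value.
    conflicts = {}
    for email, recs in duplicates.items():
        for field in fields:
            values = {r.get(field) for r in recs if r.get(field)}
            if len(values) > 1:
                conflicts.setdefault(email, {})[field] = sorted(values)
    return duplicates, conflicts


# Hypothetical customer records gathered from different touch points.
customers = [
    {"email": "Alice@Example.com ", "name": "Alice Smith", "phone": "555-0100"},
    {"email": "alice@example.com", "name": "Alice Smith", "phone": "555-0199"},
    {"email": "bob@example.com", "name": "Bob Jones", "phone": "555-0142"},
]

duplicates, conflicts = find_duplicates_and_conflicts(customers)
```

Here the two records for Alice are flagged as duplicates, and their differing phone numbers are reported as a conflict that someone has to resolve before the data is used.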

A 360-degree customer experience needs a well-defined big data analytics strategy that caters to structured data (data existing in organizational databases) as well as unstructured data (data existing on social media platforms). Many enterprises are developing business intelligence systems that combine data from both these sources and store it in a central hub.

Designing a 360-degree customer experience requires businesses to align their objectives with business strategy. A successful strategy emphasizes the following points:

- Observing the current trends

- Customer engagement through discussion forums or communities to devise a competitive strategy

- Acting upon customer feedback, given by means of suggestions or comments, to promote business growth and scalability

Your 360-degree customer experience campaign will be successful only if you take every piece of customer feedback seriously; all participants in this ecosystem must be able to contribute at any point in time to enhance business growth. Technology offers enterprises several solutions for collecting useful data and turning it into meaningful insights for business success.

Now that you have learnt some tips for reducing the complexities of big data, it will be easier to implement disruptive technology and develop smarter enterprise solutions to maintain your competitive edge.